Deep Learning - CNN - Convolutional Neural Network - CNN Architecture Tutorial

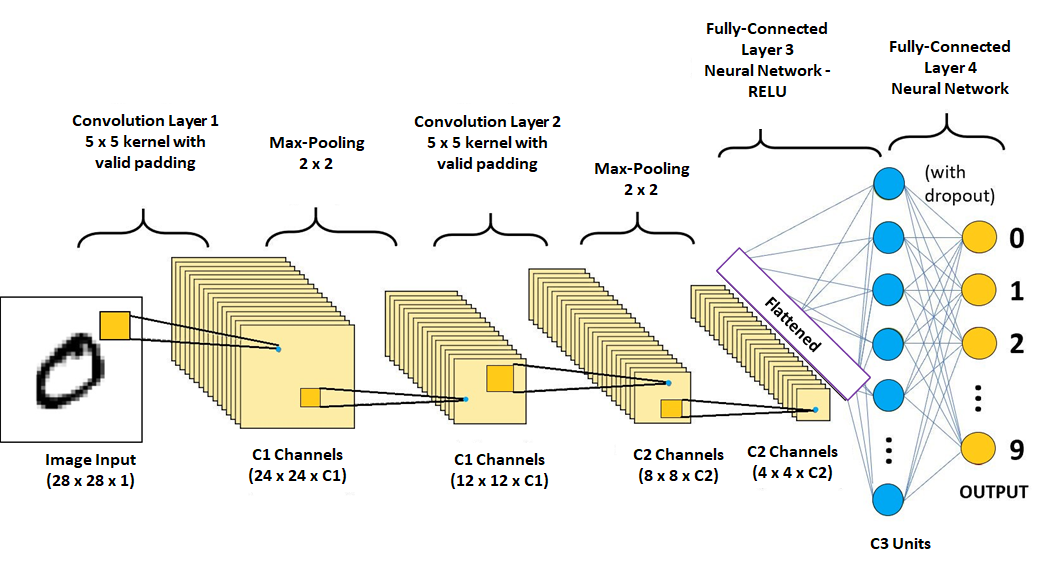

CNN Architecture - A typical CNN has three types of layers: convolutional, pooling, and fully connected.

This is the most common architecture. We can also modify it by changing the number of convolutional layers, the filters (feature maps), stride, padding, the number of FC layers and nodes, the activation function, dropout, and batch normalization.
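Stride and padding change the size of every feature map, which is why they are among the knobs listed above. A minimal sketch, assuming the common output-size formula (W - F + 2P) / S + 1 with floor division, where W is the input size, F the filter size, P the padding, and S the stride. `conv_output_size` is a hypothetical helper, not a library function.

```python
def conv_output_size(input_size, filter_size, stride=1, padding=0):
    """Output size along one spatial dimension of a convolution or pooling layer.

    Uses the standard formula (W - F + 2P) // S + 1.
    """
    return (input_size - filter_size + 2 * padding) // stride + 1


# 5 x 5 filter on a 32 x 32 input, stride 1, no padding
print(conv_output_size(32, 5))                       # 28
# Same filter with padding 2 keeps the size unchanged
print(conv_output_size(32, 5, padding=2))            # 32
# Stride 2 roughly halves the output
print(conv_output_size(32, 5, stride=2, padding=2))  # 16
# 2 x 2 pooling window with stride 2 halves a 28 x 28 map
print(conv_output_size(28, 2, stride=2))             # 14
```

Pooling layers follow the same formula, with the pooling window playing the role of the filter.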

Different CNN architectures are-

Well-known architectures (all except LeNet became popular through the ImageNet (ILSVRC) competition)-

1] LeNet - Yann LeCun

2] AlexNet

3] GoogLeNet

4] VGGNet

5] ResNet

6] Inception

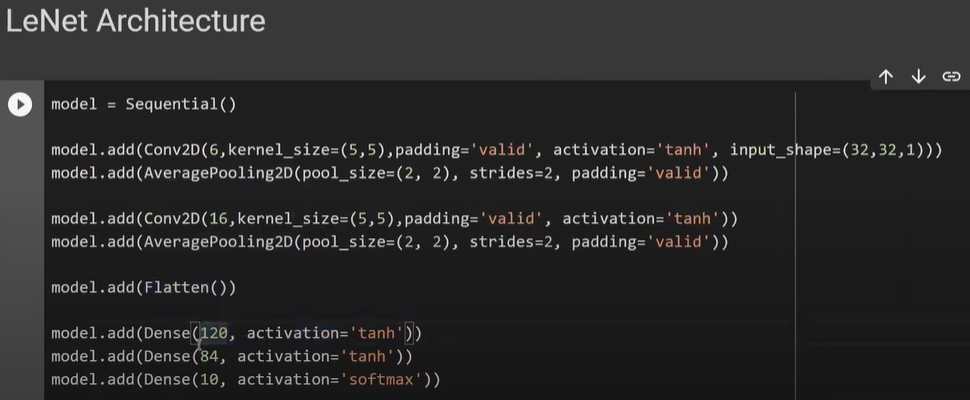

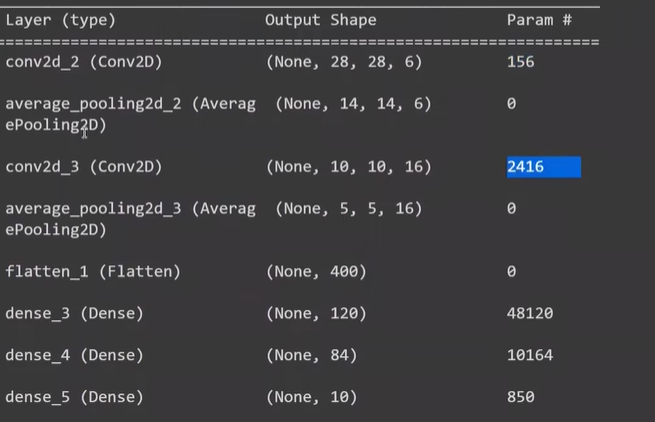

1] LeNet-5

Input layer (32 x 32 x 1, a grayscale image) →

Layer 1 → Convolutional layer (5 x 5, 6 filters) + Average Pooling layer (2 x 2 window, stride 2) - (28 x 28 x 6) to (14 x 14 x 6)

Layer 2 → Convolutional layer (5 x 5, 16 filters) + Average Pooling layer (2 x 2 window, stride 2) - (10 x 10 x 16) to (5 x 5 x 16) → Flatten (1D tensor) - 400

Layer 3 → Fully Connected layer (120 nodes) - 400 x 120

Layer 4 → Fully Connected layer (84 nodes) - 120 x 84

Layer 5 → Output layer with Softmax (10 nodes) - 84 x 10

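In Keras, calling model.summary() on a model built this way prints every layer with its output shape and its number of trainable parameters.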

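That is why it is called LeNet-5: it has 5 layers with trainable weights (2 convolutional and 3 fully connected; in this version the pooling layers have no weights and are not counted).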
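The shapes and parameter counts of the LeNet-5 layers above can be checked by hand. A minimal pure-Python sketch (no deep learning library), assuming a single-channel 32 x 32 input and exactly the layer list above; `conv_out` and `lenet5_summary` are hypothetical helper names. Each convolutional layer contributes (5 x 5 x input channels + 1) x filters parameters, and each fully connected layer contributes inputs x nodes + nodes.

```python
def conv_out(size, k, stride=1, padding=0):
    # Standard output-size formula: (W - F + 2P) // S + 1
    return (size - k + 2 * padding) // stride + 1


def lenet5_summary(input_size=32, in_channels=1, num_classes=10):
    """Print output shape and trainable parameters per layer; return the total."""
    rows = []
    size, channels = input_size, in_channels

    # Layer 1: conv 5 x 5 with 6 filters, then 2 x 2 average pooling, stride 2
    size = conv_out(size, 5)
    rows.append(("conv1", (size, size, 6), (5 * 5 * channels + 1) * 6))
    channels = 6
    size = conv_out(size, 2, stride=2)
    rows.append(("avgpool1", (size, size, channels), 0))  # pooling has no weights

    # Layer 2: conv 5 x 5 with 16 filters, then 2 x 2 average pooling, stride 2
    size = conv_out(size, 5)
    rows.append(("conv2", (size, size, 16), (5 * 5 * channels + 1) * 16))
    channels = 16
    size = conv_out(size, 2, stride=2)
    rows.append(("avgpool2", (size, size, channels), 0))

    # Flatten, then layers 3-5: fully connected (weights + one bias per node)
    units = size * size * channels
    rows.append(("flatten", (units,), 0))
    for name, nodes in (("fc1", 120), ("fc2", 84), ("output", num_classes)):
        rows.append((name, (nodes,), units * nodes + nodes))
        units = nodes

    for name, shape, params in rows:
        print(f"{name:<10}{str(shape):<16}{params}")
    total = sum(params for _, _, params in rows)
    print("Total trainable parameters:", total)
    return total


lenet5_summary()
```

The per-layer counts come out as 156, 2416, 48120, 10164 and 850, for a total of 61,706 trainable parameters. Almost 80% of them sit in the first fully connected layer (400 x 120), which previews the ANN parameter problem discussed next.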

CNN (Convolutional Neural Network) vs ANN (Artificial Neural Network)

Problems faced by an ANN on image data-

1] High computational cost

2] Overfitting

3] Loss of important features, such as the spatial arrangement of pixels

For MNIST data (28 x 28 grayscale images of handwritten digits),

In an ANN, the MNIST image is first flattened into a 1D vector of 784 (28 x 28) values → then passed through fully connected layers → and finally we get the output (the predicted digit).

In a CNN, the MNIST image keeps its 2D shape → it is passed through the filters (convolutional layer) → the result is a feature map, to which a bias is added → the feature map is passed through an activation function such as ReLU → a max pooling layer is applied → flatten → fully connected layer → softmax.
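The CNN pipeline above (filter → feature map + bias → ReLU → max pooling → flatten) can be sketched in plain Python on a tiny input. This is an illustration only, assuming a made-up 5 x 5 image, a single hand-picked 2 x 2 filter, stride 1, and no padding; all function names are hypothetical. Like most deep learning libraries, the filter is slid without flipping (cross-correlation). The fully connected and softmax steps are the same as in an ANN and are left out.

```python
def conv2d(image, kernel, bias):
    """Stride-1, no-padding convolution with one bias added to every position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw)) + bias
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    # Negative values become 0, positive values pass through unchanged
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2, stride=2):
    # Keep the largest value in each size x size window
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [
        [
            max(fmap[i * stride + a][j * stride + b]
                for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def flatten(fmap):
    # Row-major 2D -> 1D
    return [v for row in fmap for v in row]


# Made-up 5 x 5 "image": dark on the left, bright on the right (a vertical edge)
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 1],
          [-1, 1]]  # responds to a left-to-right increase in brightness
bias = -1

feature_map = conv2d(image, kernel, bias)  # 4 x 4
activated = relu(feature_map)              # 4 x 4
pooled = max_pool(activated)               # 2 x 2
vector = flatten(pooled)                   # 4 values, ready for the FC layer

print(feature_map[0])       # [-1, 1, -1, -1]
print(pooled)               # [[1, 0], [1, 0]]
print(vector)               # [1, 0, 1, 0]
# An ANN would instead flatten the raw image straight away:
print(len(flatten(image)))  # 25
```

The filter fires only where brightness jumps from left to right, and pooling keeps that response while shrinking the map. Flattening happens at the end, after the spatial structure has already been used.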

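The similarity is that an ANN node and a CNN filter play the same role: both contain weights and a bias, and both compute a weighted sum of their inputs plus the bias. The difference is that a filter looks only at a small local patch and reuses the same weights at every position of the image.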
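To make the node/filter similarity concrete, here is a small sketch, assuming made-up values for the image, the 2 x 2 filter and the bias; `neuron` and `filter_at` are hypothetical names. At one position, a filter computes exactly what a neuron computes on the flattened patch. Moving the filter reuses the same 4 weights and 1 bias.

```python
def neuron(inputs, weights, bias):
    # ANN node: weighted sum of all its inputs plus a bias
    return sum(x * w for x, w in zip(inputs, weights)) + bias


def filter_at(image, kernel, bias, i, j):
    # CNN filter at position (i, j): weighted sum of a local patch plus a bias
    k = len(kernel)
    return sum(image[i + a][j + b] * kernel[a][b]
               for a in range(k) for b in range(k)) + bias


# Made-up values for illustration
image = [[1, 2, 0],
         [3, 4, 1],
         [0, 1, 2]]
kernel = [[0.5, -1],
          [2, 0]]
bias = 1

# Filter on the top-left patch [[1, 2], [3, 4]]
print(filter_at(image, kernel, bias, 0, 0))         # 5.5
# Same patch and weights, flattened, fed to an ordinary neuron
print(neuron([1, 2, 3, 4], [0.5, -1, 2, 0], bias))  # 5.5
# Same filter (same weights and bias) reused at another position
print(filter_at(image, kernel, bias, 1, 1))         # 4.0
```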

Calculating the trainable parameters of a CNN layer-

For a convolutional layer with 50 filters → each filter is of size 3 x 3 x 3 (a 3 x 3 kernel over 3 input channels, e.g. an RGB image) = 27 weights

Total bias = each filter has one bias, so 50 filters have 50 biases

Total learnable parameters = total weights + total biases = 27 x 50 + 50 = 1350 + 50 = 1400
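The calculation above can be written as a small function. A minimal sketch; `conv_layer_params` is a hypothetical helper, not a library API. Notice that the input image's height and width are not arguments at all.

```python
def conv_layer_params(filter_h, filter_w, in_channels, num_filters):
    """Trainable parameters of one convolutional layer.

    Each filter has filter_h * filter_w * in_channels weights and one bias.
    The input image's height and width do not appear anywhere.
    """
    weights_per_filter = filter_h * filter_w * in_channels
    total_weights = weights_per_filter * num_filters
    total_biases = num_filters
    return total_weights + total_biases


# 50 filters of size 3 x 3 over a 3-channel (RGB) input
print(conv_layer_params(3, 3, 3, 50))  # 27 * 50 + 50 = 1400
```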

The number of trainable parameters in a convolutional layer does not depend on the input's height and width; it depends only on the filter size, the number of input channels, and the number of filters.

Difference from ANN-

If you change the input image size (for example from 32 x 32 x 3 to 224 x 224 x 3) while keeping the same convolutional layer, it still has 1400 trainable parameters, because in a CNN the parameter count does not depend on the input's height and width.

In an ANN, however, every input pixel is connected to every node of the first hidden layer, so the number of trainable parameters grows as the input (image) size increases.
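A quick comparison illustrates the difference. This is a sketch under an assumption made only for illustration: the ANN's first layer is taken to be a fully connected layer with 50 nodes (matching the 50 filters); both function names are hypothetical.

```python
def conv_layer_params(filter_size, in_channels, num_filters):
    # (k * k * C weights + 1 bias) per filter; image height/width are not involved
    return (filter_size * filter_size * in_channels + 1) * num_filters


def dense_layer_params(height, width, channels, num_nodes):
    # Every flattened input pixel connects to every node, plus one bias per node
    return height * width * channels * num_nodes + num_nodes


for h, w in [(32, 32), (224, 224)]:
    print(f"{h} x {w} x 3 input: conv = {conv_layer_params(3, 3, 50)}, "
          f"dense = {dense_layer_params(h, w, 3, 50)}")
# The conv layer stays at 1400; the dense layer grows from 153650 to 7526450
```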

Obviously, if the number of trainable parameters increases, the computational cost increases and so does the risk of overfitting. In addition, flattening the image into 1D loses important features like the spatial arrangement of pixels (a CNN works on the 2D image, while an ANN works on a 1D vector).