Deep Learning - RNN - Recurrent Neural Network - RNN Architecture & Forward Propagation Tutorial

Why RNN?

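1] RNN - Handles sequential data of any length

ANN - Requires a fixed-size input, so it does not suit sequential data of varying length; zero-padding every input up to the maximum length also increases the cost of computation.

2] RNN - Preserves the order of the sequence, and that order carries meaning

ANN - Has no notion of order between its inputs, so the sequential information is lost.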

RNN Architecture

Each input sample to an RNN has the shape (timesteps, input_features): one row per timestep (here, per word), one column per feature.

| Review Input | Sentiment (1 = positive, 0 = negative) |
|---|---|
| movie was good | 1 |
| movie was bad | 0 |
| movie was not good | 0 |

Vocabulary of 5 words, each one-hot encoded:

movie - [1, 0, 0, 0, 0]

was - [0, 1, 0, 0, 0]

good - [0, 0, 1, 0, 0]

bad - [0, 0, 0, 1, 0]

not - [0, 0, 0, 0, 1]
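The following is a minimal pure-Python sketch of how one review becomes a (timesteps, input_features) matrix using the 5-word vocabulary above. It assumes whitespace tokenization. The helper names one_hot and encode_review are hypothetical.

```python
# Vocabulary from the list above; a word's position is the index of its 1.
VOCAB = ["movie", "was", "good", "bad", "not"]


def one_hot(word: str) -> list[int]:
    """Return the one-hot vector for a word in VOCAB."""
    vec = [0] * len(VOCAB)
    vec[VOCAB.index(word)] = 1
    return vec


def encode_review(review: str) -> list[list[int]]:
    """Encode a review as a (timesteps, input_features) matrix: one row per word."""
    return [one_hot(w) for w in review.split()]


sample = encode_review("movie was good")
for row in sample:
    print(row)
# [1, 0, 0, 0, 0]
# [0, 1, 0, 0, 0]
# [0, 0, 1, 0, 0]
print(len(sample), len(sample[0]))  # 3 5 -> (timesteps, input_features)
```

"movie was not good" would give a 4 x 5 matrix instead, which is why reviews of different lengths have to be padded before they can be stacked into one batch.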

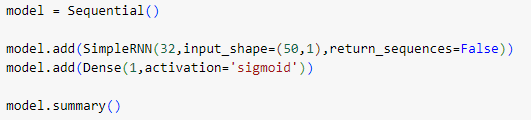

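In Keras, SimpleRNN expects a 3D tensor of shape (batch_size, timesteps, input_features). For the three reviews above this is (3, 4, 5): 3 reviews, 4 timesteps (the longest review has 4 words, and shorter reviews are zero-padded), and 5 input features (the size of the one-hot vocabulary).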
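Below is a NumPy sketch of the (3, 4, 5) batch and one forward pass through a simple RNN. It uses the recurrence h_t = tanh(x_t W_x + h_{t-1} W_h + b) with h_0 = 0, followed by a sigmoid output for sentiment. The number of hidden units, the random weights, and all helper names are illustrative assumptions, not a trained model. Padding here is post-padding, and the padded zero timesteps are still fed through the recurrence because no masking is applied.

```python
import numpy as np

VOCAB = ["movie", "was", "good", "bad", "not"]
REVIEWS = ["movie was good", "movie was bad", "movie was not good"]


def to_batch(reviews, vocab):
    """Stack one-hot encoded reviews into a (batch_size, timesteps, input_features)
    tensor, zero-padding shorter reviews at the end."""
    timesteps = max(len(r.split()) for r in reviews)
    batch = np.zeros((len(reviews), timesteps, len(vocab)))
    for i, review in enumerate(reviews):
        for t, word in enumerate(review.split()):
            batch[i, t, vocab.index(word)] = 1.0
    return batch


def rnn_forward(x, w_x, w_h, b):
    """Unrolled forward pass: h_t = tanh(x_t @ w_x + h_{t-1} @ w_h + b), h_0 = 0.
    Returns the final hidden state, shape (batch_size, units)."""
    batch_size, timesteps, _ = x.shape
    h = np.zeros((batch_size, w_h.shape[0]))
    for t in range(timesteps):
        # The same weights are reused at every timestep.
        h = np.tanh(x[:, t, :] @ w_x + h @ w_h + b)
    return h


x = to_batch(REVIEWS, VOCAB)
print(x.shape)  # (3, 4, 5)

units = 3  # hypothetical number of hidden units
rng = np.random.default_rng(0)
w_x = rng.normal(size=(len(VOCAB), units))  # input -> hidden weights
w_h = rng.normal(size=(units, units))       # hidden -> hidden (recurrent) weights
b = np.zeros(units)                         # hidden bias

h_final = rnn_forward(x, w_x, w_h, b)
print(h_final.shape)  # (3, 3)

# Output layer: one sigmoid unit giving a sentiment probability per review.
w_y = rng.normal(size=(units, 1))
y_hat = 1.0 / (1.0 + np.exp(-(h_final @ w_y)))
print(y_hat.shape)  # (3, 1)
```

Only the final hidden state is passed to the output layer here, which matches a many-to-one setup such as sentiment classification.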

How to convert text into vectors?

Two approaches: integer encoding, and integer encoding followed by an embedding.

- Using Integer Encoding

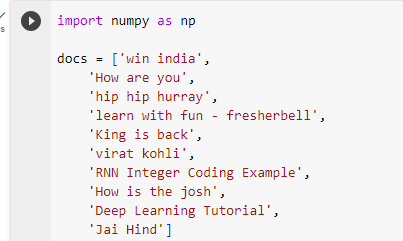

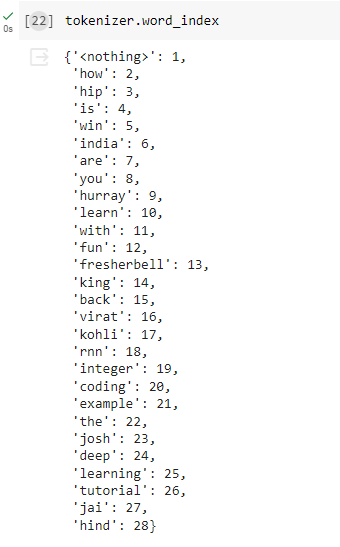

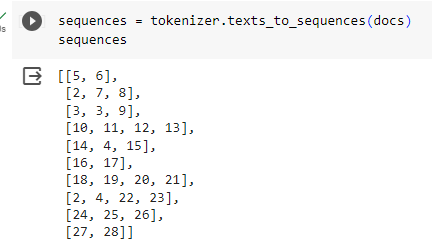

Step 1 - Build a vocabulary from the unique words in the text, i.e. tokenize the text and assign each word an integer index

| Text | Vector |
|---|---|
| Hi there | [1 2] |
| How are you | [3 4 5] |
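Step 1 can be sketched in pure Python as follows. This sketch assumes lower-casing, whitespace tokenization, and indices assigned in order of first appearance starting at 1, so that 0 stays free for padding. Library tokenizers may order indices differently (for example by word frequency). The function names are hypothetical.

```python
def build_vocab(texts):
    """Assign each unique (lower-cased) word an index starting at 1,
    in order of first appearance; 0 is reserved for padding."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab) + 1
    return vocab


def encode_texts(texts, vocab):
    """Replace every word with its integer index."""
    return [[vocab[w] for w in text.lower().split()] for text in texts]


texts = ["Hi there", "How are you"]
vocab = build_vocab(texts)
print(vocab)                      # {'hi': 1, 'there': 2, 'how': 3, 'are': 4, 'you': 5}
print(encode_texts(texts, vocab))  # [[1, 2], [3, 4, 5]]
```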

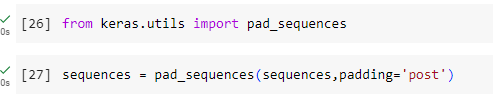

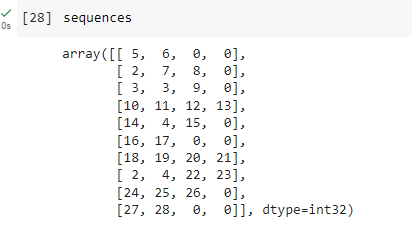

Step 2 - Because the texts have different lengths, pad the shorter sequences with zeros so that all sequences have the same length

| Text | Vector | After Padding |
|---|---|---|
| Hi there | [1 2] | [1 2 0] |
| How are you | [3 4 5] | [3 4 5] |
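The padding in the table is post-padding: zeros are appended at the end. The sketch below reproduces that behaviour. Note that Keras's pad_sequences pads at the front by default unless padding='post' is passed. The helper pad_post and its optional maxlen truncation are illustrative assumptions.

```python
def pad_post(sequences, maxlen=None, value=0):
    """Make all sequences the same length by appending `value` at the end.
    If maxlen is given, longer sequences are truncated at the end first."""
    if maxlen is None:
        maxlen = max(len(s) for s in sequences)
    padded = []
    for s in sequences:
        s = s[:maxlen]                                   # truncate if too long
        padded.append(s + [value] * (maxlen - len(s)))   # pad if too short
    return padded


print(pad_post([[1, 2], [3, 4, 5]]))  # [[1, 2, 0], [3, 4, 5]]
```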

The padded sequences are then passed to the model for training.

Using Code - Integer Encoding RNN Practical

- Using Embedding

In natural language processing, a word embedding represents a word as a real-valued vector that encodes its meaning, so that words closer together in the vector space are expected to be similar in meaning. Unlike one-hot vectors, embeddings are dense, and their size does not have to grow with the vocabulary.
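Mechanically, an embedding layer is a lookup table with one row per integer index. The NumPy sketch below maps the padded integer sequences from the previous steps to dense vectors and compares two vectors with cosine similarity. The embedding size and the random weights are placeholders: in a real model the rows are learned during training, and only then would similar words end up with higher similarity.

```python
import numpy as np

vocab_size = 6     # indices 0..5: 0 = padding, 1..5 = "hi", "there", "how", "are", "you"
embedding_dim = 4  # hypothetical vector size

rng = np.random.default_rng(42)
# One row per index; random placeholders standing in for learned weights.
embedding_matrix = rng.normal(size=(vocab_size, embedding_dim))

padded = np.array([[1, 2, 0], [3, 4, 5]])
embedded = embedding_matrix[padded]  # row lookup: each index -> its vector
print(embedded.shape)  # (2, 3, 4) = (batch_size, timesteps, embedding_dim)


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


print(cosine_similarity(embedding_matrix[1], embedding_matrix[2]))
```

The embedded tensor has the same (batch_size, timesteps, features) layout an RNN layer expects, with embedding_dim taking the place of the one-hot vocabulary size.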

Using Code - Sentiment Analysis Practical